Using a MIDI controller to mix Mac application volumes

For a while, I've wanted control over the volume of individual macOS applications, using a physical controller. This would allow me to turn Spotify down when I'm concentrating, or turn up a Slack call if I'm struggling to hear. I've seen this done on Windows, but never on a Mac.

I recently found a solution, using the open source BackgroundMusic project, and my own code to interface with a MIDI device. In this post, I'll share how I did it.

BackgroundMusic

This open source project provides the application level control I was looking for. There's a great look at the internals here, and while understanding those isn't needed for the rest of this post, they're interesting regardless. What matters is that this provides the audio driver and UI code for mixing applications.

The Controller

As the name suggests, MIDI controllers all use the same protocol, so the choice of controller isn't too important. I chose the Behringer X-TOUCH MINI, available on Amazon. It only costs around £35 ($43.22 USD at the time of writing), which I see as a fairly reasonable price. I also like the size, which works well beside my MacBook on my desk.

A proof of concept, with Web MIDI and AppleScript

I only have a small amount of Objective-C experience, so where possible, I wanted to stay in languages I was familiar with. Learning new languages is fun, but I decided that working with audio drivers in an unfamiliar language might be a bit too ambitious!

With that in mind, I turned to Web MIDI, an API exposing MIDI devices to JavaScript. This has fairly limited support, unfortunately, but has been available since 2015 in Chrome. I played around, taking a lot of inspiration from Smashing Magazine's brilliant article, and was able to read data coming from my device. The following code connects to my controller and tells the browser to log any messages it sends:

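// Ask the browser for access to MIDI devices.
const midiAccess = await navigator.requestMIDIAccess();
// Look up my controller by its ID, which is specific to my machine.
const input = midiAccess.inputs.get("-511226250");
// Log every message the controller sends.
input.onmidimessage = console.log;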

Using the above code, here's an example of the output I saw:

MIDIMessageEvent {isTrusted: true, data: Uint8Array(3), type: "midimessage", target: MIDIInput, currentTarget: MIDIInput, …}

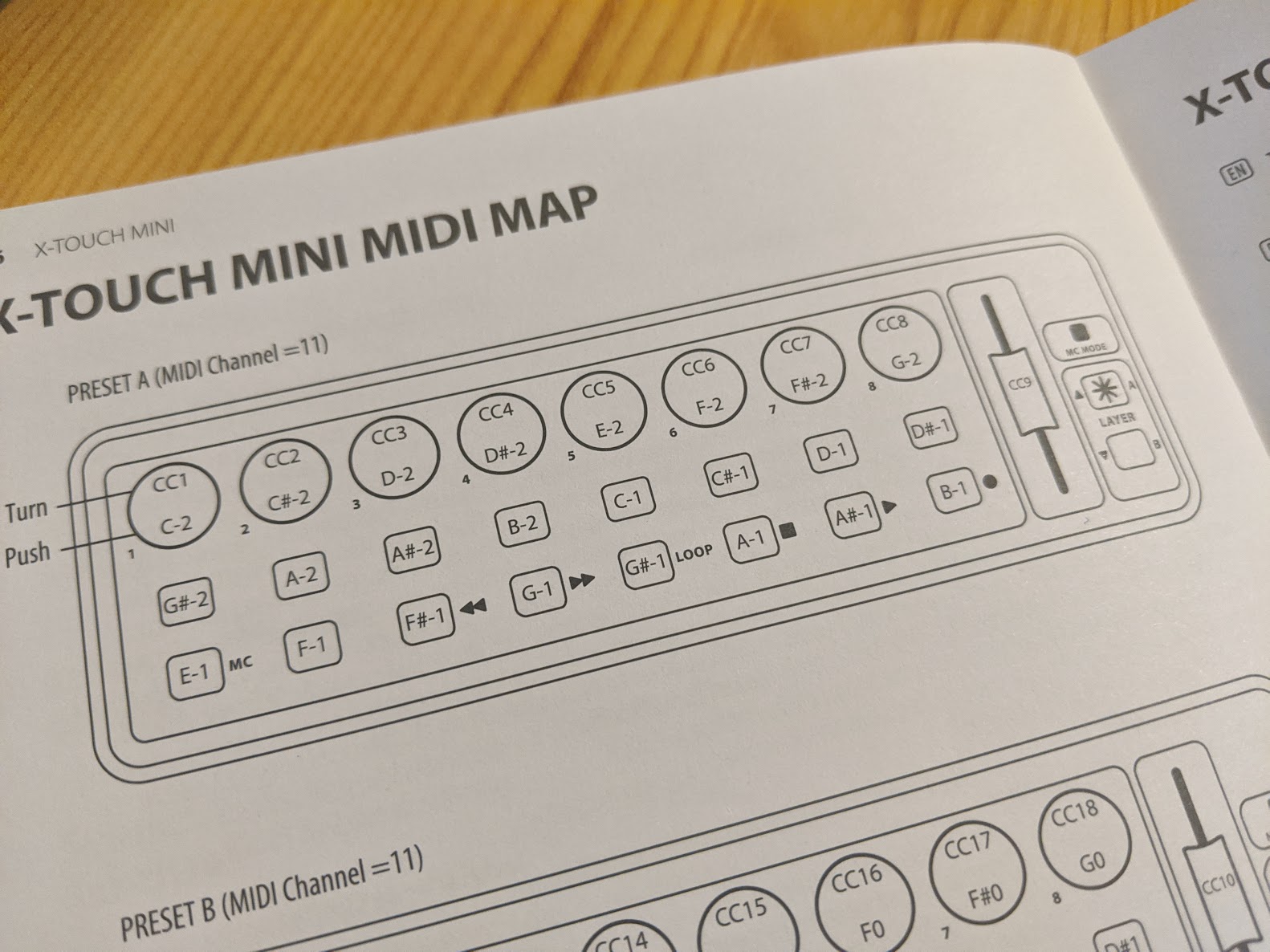
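The ID passed to `inputs.get` above is specific to my machine, so it won't work anywhere else. As a rough sketch of an alternative, the helper below searches the inputs by name instead. `findInputByName` is a hypothetical helper, not part of Web MIDI, and the name your device reports may differ from what is printed on it.

```javascript
// Return the first MIDI input whose name contains `needle` (case-insensitive),
// or undefined if none matches. `inputs` is any Map-like collection of
// objects with a `name` property, such as midiAccess.inputs.
function findInputByName(inputs, needle) {
  const wanted = needle.toLowerCase();
  for (const input of inputs.values()) {
    if ((input.name || "").toLowerCase().includes(wanted)) {
      return input;
    }
  }
  return undefined;
}

// In the browser this might be used as follows. The name "X-TOUCH MINI"
// is an assumption about how the controller identifies itself:
//   const midiAccess = await navigator.requestMIDIAccess();
//   const input = findInputByName(midiAccess.inputs, "X-TOUCH MINI");
```

Logging `input.name` for every entry in `midiAccess.inputs` is an easy way to find the right search string.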

The data array contains the main content of the message. For example, when moving the slider on the far right of the device, one possible value is [186, 9, 52]. The first value, 186 (0xBA in hexadecimal), is the status byte: its upper four bits, 0xB, mark this as a control change, the message MIDI uses to report that the value of an input has changed, and its lower four bits carry the channel number. The second value, 9, identifies the input that moved, in this case the slider. Finally, 52 is the new slider position, on a scale from 0 to 127. To get an understanding of the protocol, I referred to this table. To identify which physical input was used, I was able to reference this manual which came with the device.
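To make the byte layout concrete, here is a minimal sketch of a decoder for messages like the one above. `parseMidiMessage` is a name I've made up for illustration, and it only distinguishes control changes from everything else. It relies on the standard MIDI layout, where the upper four bits of the first byte give the message type and the lower four bits give the channel.

```javascript
// Decode a three-byte MIDI channel message into its parts.
// Accepts a plain array or the Uint8Array found on MIDIMessageEvent.data.
function parseMidiMessage(data) {
  const [status, data1, data2] = data;
  const kind = status & 0xf0;          // upper four bits: message type
  const channel = (status & 0x0f) + 1; // lower four bits: channel, shown as 1-16
  if (kind !== 0xb0) {
    // Not a control change; report the raw status byte and stop.
    return { type: "other", channel, status };
  }
  return { type: "controlChange", channel, controller: data1, value: data2 };
}

// The slider message from the example above.
console.log(parseMidiMessage([186, 9, 52]));
// → { type: 'controlChange', channel: 11, controller: 9, value: 52 }
```

Hooking this up is a matter of replacing `console.log` in the handler with a function that calls `parseMidiMessage(event.data)`.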

With that figured out, I had code which was being run each time an input was moved. I just needed to use this to change an application's volume within BackgroundMusic.

AppleScript

AppleScript is a (fairly old) scripting language, built into macOS. The idea is that native applications expose certain functions, which can then be called by scripts written in the AppleScript language. My plan was to package my existing JavaScript code into an Electron application. This would allow it to run shell commands, and consequently, AppleScript!

First, I registered my command in BackgroundMusic's BGMApp.sdef file. This file is used to provide a mapping from AppleScript to Objective-C code. In this case, it already existed, since BackgroundMusic has existing functionality to change the output device using AppleScript. For my use case, I appended the following:

<command name="setappvolume" code="setappvo" description="Set volume of app">
    <cocoa class="BGMASAppVolumeCommand" />
    <parameter name="bundle" code="bndl" description="Bundle of app" type="text"/>
    <parameter name="volume" code="volu" description="New volume" type="integer"/>
</command>

This registered a setappvolume command, which I then had to implement in Objective-C.

In my implementation, I loop through every running application, and alter its volume if it matches:

- (id) performDefaultImplementation {
    // Arguments passed in from the AppleScript command.
    NSString* bundle = self.evaluatedArguments[@"bundle"];
    int volume = [self.evaluatedArguments[@"volume"] intValue];

    // Set the volume for every running application whose bundle ID matches.
    NSArray* apps = [[NSWorkspace sharedWorkspace] runningApplications];
    for (NSRunningApplication* application in apps) {
        if ([application.bundleIdentifier isEqualToString:bundle]) {
            [[AppDelegate appVolumes] setVolume:volume forAppWithProcessID:application.processIdentifier bundleID:bundle];
        }
    }
    return @"OK";
}

The Electron Glue

With BackgroundMusic ready to receive commands, I set up a simple Electron project. When it notices a slider move, it runs the following to alter the volume of the application I associated with the input:

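const childProcess = require("child_process");

const script = `
tell application "Background Music"
  setappvolume bundle "${bundleToChange}" volume ${volume}
end tell
`;
// Pass the script as an argument rather than inside a shell string,
// so that quotes in the script cannot break the command.
childProcess.execFile("osascript", ["-e", script]);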

This worked, so my first prototype was complete!
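The snippet above leaves out how a slider is tied to an application and how the MIDI value becomes a volume. Here is a minimal sketch of one way that could look. The controller numbers, the bundle IDs in the map, and the 0 to 100 volume range are all assumptions for illustration, so adjust them to your own device and to whatever range your build of BackgroundMusic expects.

```javascript
// Hypothetical mapping from MIDI controller number to application bundle ID.
const BUNDLES_BY_CONTROLLER = new Map([
  [1, "com.spotify.client"],
  [2, "com.tinyspeck.slackmacgap"],
]);

// Scale a 7-bit MIDI value (0-127) to a volume from 0 to 100.
// The 0-100 range is an assumption, not something BackgroundMusic documents here.
function midiValueToVolume(value) {
  const clamped = Math.min(127, Math.max(0, value));
  return Math.round((clamped / 127) * 100);
}

// Build the AppleScript for a control change, or return null when the
// controller has no application assigned to it.
function buildVolumeScript(controller, value) {
  const bundle = BUNDLES_BY_CONTROLLER.get(controller);
  if (bundle === undefined) {
    return null;
  }
  return [
    'tell application "Background Music"',
    `  setappvolume bundle "${bundle}" volume ${midiValueToVolume(value)}`,
    "end tell",
  ].join("\n");
}
```

Returning `null` for unmapped inputs keeps the caller simple: it can skip running `osascript` entirely when a knob or button isn't assigned to anything.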

MIDI in Objective-C

While my prototype was working, there were a few issues. First of all, it was inconvenient to have a separate Electron application that I needed to run constantly in the background. Second, there was a noticeable lag between when I moved the slider and when my volume changed. Feeling reassured by how things were going, I looked at writing some Objective-C.

To connect to the device, I used the MIKMIDI library. Accessing devices was easy:

[MIKMIDIDeviceManager sharedDeviceManager].availableDevices

I then registered an event handler:

[[MIKMIDIDeviceManager sharedDeviceManager] connectInput:source error:NULL eventHandler:^(MIKMIDISourceEndpoint *eventSource, NSArray *commands) {
    // Each element of commands is a MIKMIDICommand; the volume handling goes here.
}];

The rest of the work was fairly trivial. Similar to what I had done in JavaScript, I worked out which input was changed, and mapped this to a particular application bundle ID. I then called existing BackgroundMusic methods to change application volume, or for one slider, the volume of my entire system.

I did have to make some small changes to BackgroundMusic, as some methods didn't take exactly what I wanted. I would love to open source those in the future, but that needs some work first, because there is currently no way of configuring which applications to control, or the layout of your particular MIDI device.

This new, purely Objective-C implementation was much faster. It also works without the need for another application, which means I'm ready to go as soon as my Mac boots up.

Conclusion

This was a fun project, which I'd consider complete. There have been a few instances where BackgroundMusic has crashed, but because this happens very rarely, I haven't investigated yet. I'm not sure if it's an issue in my code or in BackgroundMusic. I'd like to find some time to look into it, and to fix the problem if I can track it down.